diff --git a/lab01/README.md b/lab01/README.md

index d854cfe..4176394 100644

--- a/lab01/README.md

+++ b/lab01/README.md

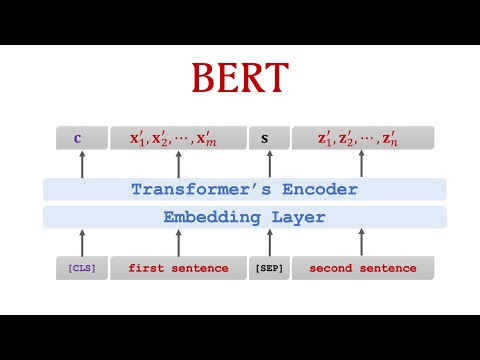

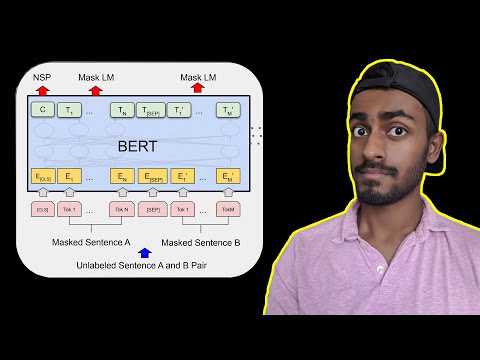

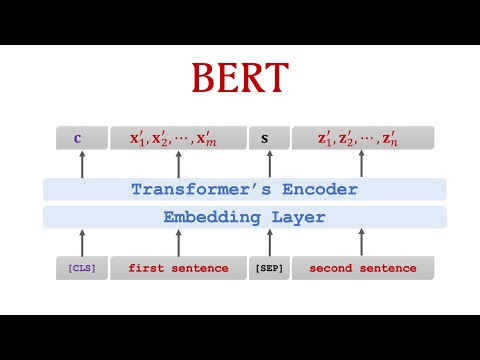

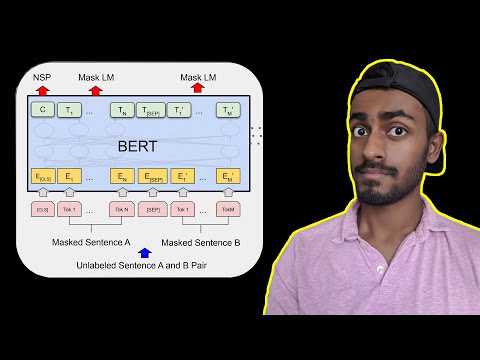

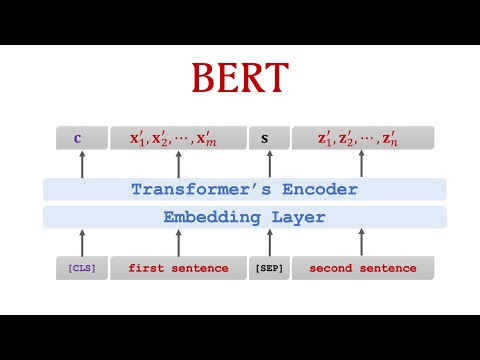

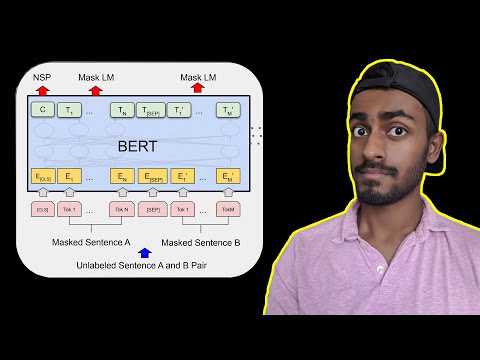

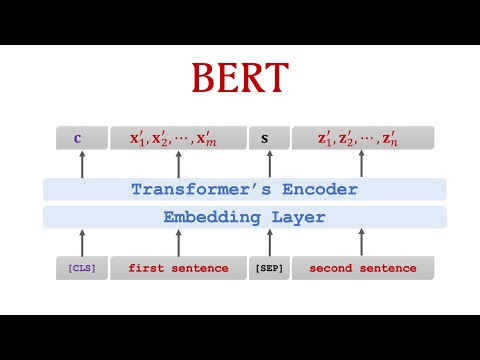

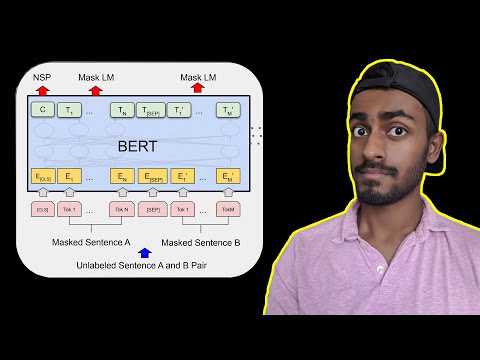

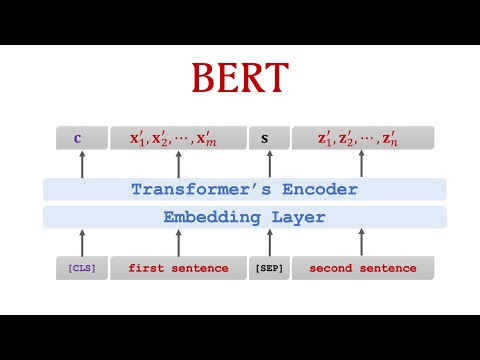

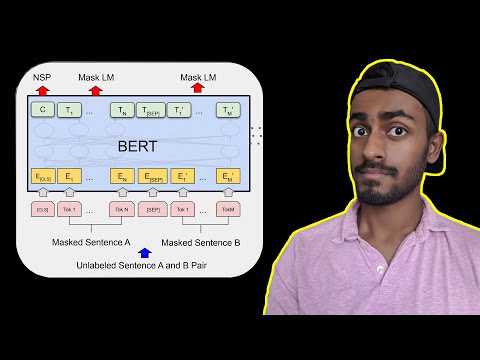

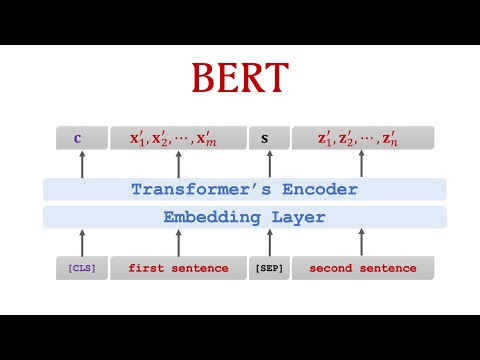

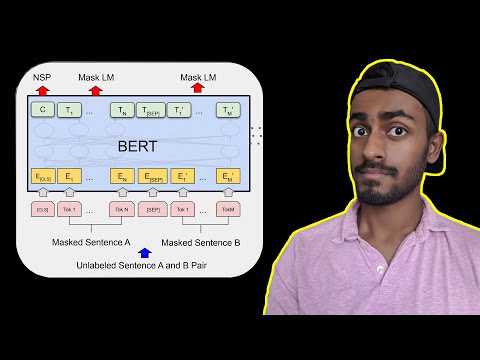

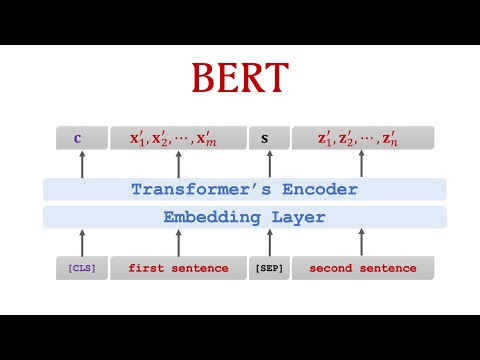

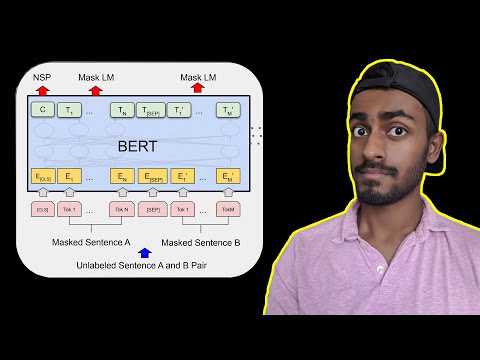

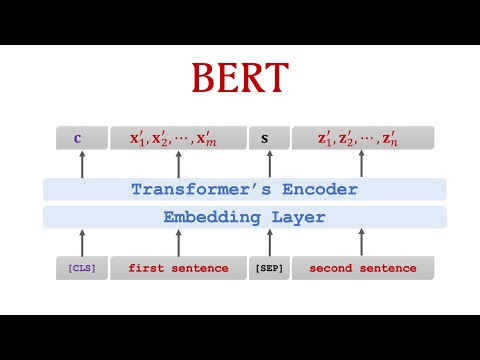

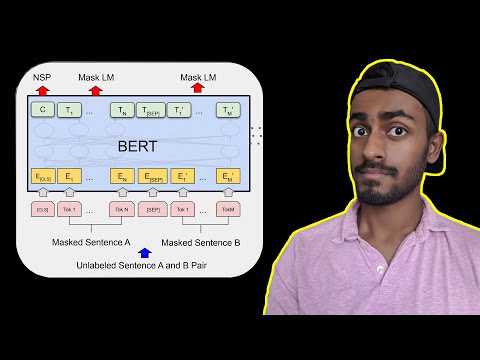

@@ -121,12 +121,6 @@ We use a pre-trained AI model called **BERT (Bidirectional Encoder Representatio

### How BERT Works

- -

-[](https://www.youtube.com/watch?v=EOmd5sUUA_A)

-

-[](https://www.youtube.com/watch?v=xI0HHN5XKDo)

-

BERT reads the entire sentence at once and uses a mechanism called **self-attention** to focus on important words. For example:

```

@@ -134,6 +128,12 @@ Sentence: "The bank was robbed."

BERT understands that "bank" refers to a financial institution because of the word "robbed."

```

+ +

+[](https://www.youtube.com/watch?v=EOmd5sUUA_A)

+

+[](https://www.youtube.com/watch?v=xI0HHN5XKDo)

+

### Why Use BERT?

- **Pre-trained**: BERT has already learned from millions of sentences, so it understands language well.
